large language model

This article is a stub. You can help the IndieWeb wiki by expanding it with relevant information.

A large language model (AKA LLM) is usually a reference to a service, like OpenAI’s ChatGPT, that synthesizes text based on a massive set of prose typically crawled and indexed from the open web and other sources. LLMs should not be used to contribute content to the IndieWeb wiki; several IndieWeb sites disclaim any use thereof for their content.

Why

This section is a stub. You can help the IndieWeb wiki by expanding it.

Why disclaim

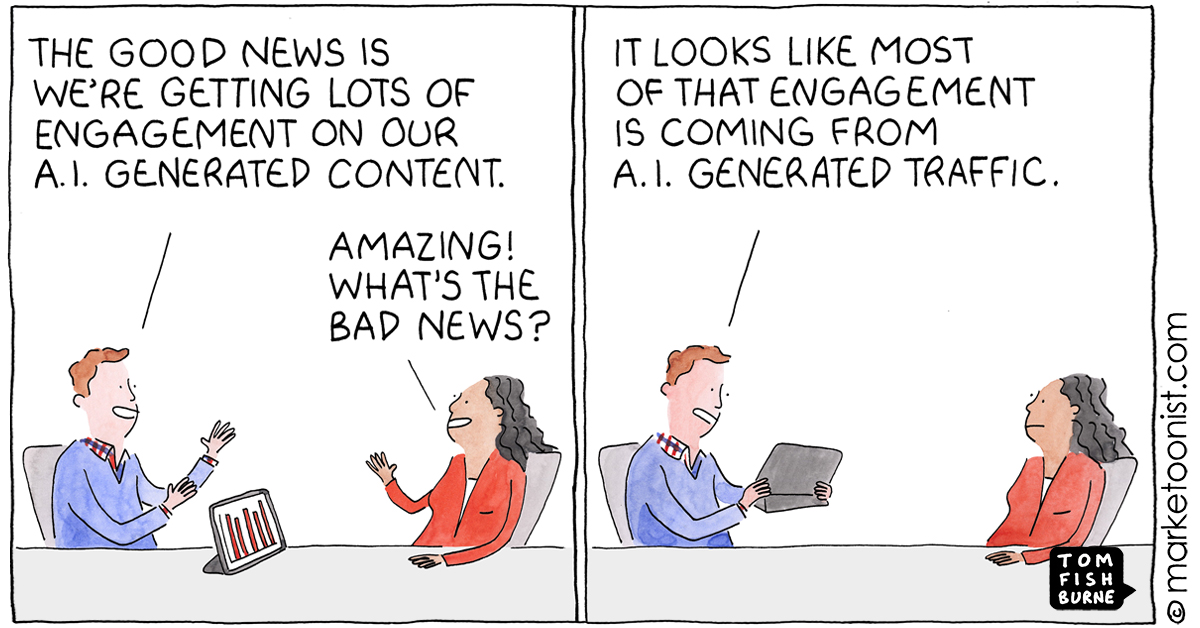

With more and more LLM-generated content being published to the web, e.g. by news outlets like Hoodline, people will increasingly look for actual human-written content instead, and disclaiming your use of LLMs will appeal to a larger and larger audience.

How to

This section is a stub. You can help the IndieWeb wiki by expanding it.

Understanding and managing LLM traffic

See large language model traffic
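As a rough illustration of what understanding this traffic can involve, the sketch below tallies access-log lines by crawler name. The token list and the sample log lines are assumptions made for this example, not an authoritative or complete set; a maintained list of LLM bot user agents is a better source for real use.

```python
from collections import Counter

# Illustrative, non-exhaustive crawler tokens (an assumption for this sketch).
LLM_BOT_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot", "Bytespider")

# Made-up log lines in a common access-log layout, for demonstration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [15/Apr/2025:10:00:00 +0000] "GET /post HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [15/Apr/2025:10:00:01 +0000] "GET /tags HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [15/Apr/2025:10:00:02 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/125.0"',
    '203.0.113.5 - - [15/Apr/2025:10:00:03 +0000] "GET /feed HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
]


def tally_llm_hits(log_lines):
    """Count log lines that mention a known LLM crawler token (case-insensitive)."""
    counts = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in LLM_BOT_TOKENS:
            if token.lower() in lowered:
                counts[token] += 1
                break  # attribute each request to at most one crawler
    return counts


if __name__ == "__main__":
    for bot, hits in tally_llm_hits(SAMPLE_LOG).most_common():
        print(bot, hits)
```

Matching on the user agent string only catches crawlers that identify themselves honestly, so a tally like this is a lower bound rather than a full picture.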

Promote your work as human created

You can add a Not By AI badge on your website. See some IndieWeb Examples below.

IndieWeb Examples

In rough date order of adding to personal sites:

- Hidde de Vries added a ‘no LLMs involved’ note to the site-wide footer of hidde.blog from 17 March 2023

No language models were involved in writing the blog posts on here.

- capjamesg since at least 2023-07-14(?) added a "Not By AI" image button on all his blog posts going back to at least 2020

- Aaron Parecki put a "Not by AI" badge in his global website footer (e.g. bottom of https://aaronparecki.com/) next to the IndieWeb/Microformats/Webmention buttons on 2023-10-15.

- Tantek Çelik put a general disclaimer about no use of LLMs for his site on his homepage on 2023-12-31: https://tantek.com/#disclaimer

No large language models were used in the production of this site. (inspired by RFC 9518 Appendix A ¶ 4)

- Paul Watson put a general disclaimer about no use of LLMs for his site on his blog homepage on 2024-01-04 and also a similar statement and button (by https://notbyai.fyi/) on the footer of all pages on 2024-01-05:

No large language models (LLM) or similar AI technologies were involved in writing the blog posts on here.

- to2ds updated the existing blurb above the fold on the homepage to use the term "LLMs" instead of the generic "AI" [1] on 2024-01-05, and also added written and painted "Not By AI" badges to the common footer along with the IndieWeb badge and link.

- fluffy added a blurb to their about page on 2025-07-23.

- Dr. Matt Lee blocks as much of this as possible. His content is not permitted to be used to train such things. His Libre.fm and 1800www.com projects are changing their mission/focus as a result.

- Joe Crawford has a slash page under "/ai" (artlung.com/ai) describing his usage and positions on large language models and related technology.

- Add yourself here… (see this for more details)

IndieWeb Wiki Examples

Do not use LLMs for content for the wiki:

- in definitions: definition#Do_not_copy_from_LLM_generated_text

- or content in general: wikifying#Do_not_copy_from_LLM_generated_text

Don't even think about using ChatGPT to contribute material to the wiki, because you cannot know whether you are able to contribute it to the public domain / CC0.

- 2024-01-08 The Guardian UK: ‘Impossible’ to create AI tools like ChatGPT without copyrighted material, OpenAI says

Other Examples

- IETF example: https://www.rfc-editor.org/rfc/rfc9518.html#appendix-A-4

No large language models were used in the production of this document.

- https://notbyai.fyi/

IndieWeb opinions

- 2023-03-13 Tantek Çelik: Blog as if there’s an #AI being trained to be you based on your blog posts.

- 2026-01-31 Coyote: How LLMs & Chatbots Are Bad For the Indie Web

- Add yourself here… (see this for more details)

Other Examples

This section is a stub. You can help the IndieWeb wiki by expanding it.

- News publication example: https://www.sfchronicle.com/ai_use/

- ...

Criticism

Hammering bandwidth while disrespecting robots.txt

- 2025-04-15 Denial

The worst of the internet is continuously attacking the best of the internet. This is a distributed denial of service attack on the good parts of the World Wide Web.

Over the past few months, instead of working on our priorities at SourceHut, I have spent anywhere from 20-100% of my time in any given week mitigating hyper-aggressive LLM crawlers at scale. [...] If you think these crawlers respect robots.txt then you are several assumptions of good faith removed from reality. These bots crawl everything they can find, robots.txt be damned.
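For contrast, here is a minimal sketch of the check a well-behaved crawler is expected to make before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content, the `allowed` helper, and the example URLs are hypothetical and exist only for this illustration; as the quote above notes, nothing forces a crawler to run such a check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that asks one AI crawler to stay away entirely.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse in memory, no network access
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(allowed(ROBOTS_TXT, "GPTBot", "https://example.com/post"))
    print(allowed(ROBOTS_TXT, "ExampleBrowser", "https://example.com/post"))
```

Because robots.txt is purely advisory, sites that see it ignored generally have to fall back on server-side blocking instead.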

Encourages disregarding copyright

- https://t.co/1rmFNq7spR short link to 2024-04-06 NYT: How Tech Giants Cut Corners to Harvest Data for A.I. / OpenAI, Google and Meta ignored corporate policies, altered their own rules and discussed skirting copyright law as they sought online information to train their newest artificial intelligence systems.

- 2024-04-30 NYTimes: 8 Daily Newspapers Sue OpenAI and Microsoft Over A.I. / The suit, which accuses the tech companies of copyright infringement, adds to the fight over the online data used to power artificial intelligence.

Encourages training on private data

- https://twitter.com/heyitsseher/status/1776823362292969880

Google quietly changed policies to scrape public Google Doc data to train AI.

Then purposely released new terms of service on "Fourth of July weekend, when people were typically focused on the holiday"

How Tech Giants Cut Corners to Harvest Data for A.I. https://t.co/1rmFNq7spR

Discourages open creativity and sharing

- 2024-03-11 Les Orchard: Dance like the bots aren't watching? / TL;DR: Why bother sharing anything on the open web if it's just going to be fodder for extractive, non-reciprocal bots?

- 2024-04-22 The Atlantic: It’s the End of the Web as We Know It

SEO will morph into LLMO: large-language-model optimization, the incipient industry of manipulating AI-generated material to serve clients’ interests.

[...]

LLMs aren’t people we connect with. Eventually, people may stop writing, stop filming, stop composing—at least for the open, public web.

Labor Impact

- 2026-02-09 : AI Doesn’t Reduce Work—It Intensifies It

Some workers described realizing, often in hindsight, that as prompting during breaks became habitual, downtime no longer provided the same sense of recovery. (...) Many workers noted that they were doing more at once—and feeling more pressure—than before they used AI, even though the time savings from automation had ostensibly been meant to reduce such pressure.

- 2026-02-04 : Companies replaced entry-level workers with AI. Now they are paying the price

- 2026-01 : AI at work is anti-labor by design

- 2026-01-27 : Pinterest cuts workforce by around 15 percent to focus on AI

- 2026-01-07 : AI layoffs are looking more and more like corporate fiction that’s masking a darker reality, Oxford Economics suggests (archived)

- 2025-10-23 : The AI Gold Rush Is Cover for a Class War

- 2025-06-25 : AI Killed My Job: Tech workers

The title is somewhat tongue-in-cheek; we recognize that AI is not sentient, that it’s management, not AI, that fires people, but also that there are many ways that AI can “kill” a job, by sapping the pleasure one derives from work, draining it of skill and expertise, or otherwise subjecting it to degradation.

I heard from workers who recounted how managers used AI to justify laying them off, to speed up their work, and to make them take over the workload of recently terminated peers. I heard from workers at the biggest tech giants and the smallest startups—from workers at Google, TikTok, Adobe, Dropbox, and CrowdStrike, to those at startups with just a handful of employees. I heard stories of scheming corporate climbers using AI to consolidate power inside the organization. I heard tales of AI being openly scorned in company forums by revolting workers. And yes, I heard lots of sad stories of workers getting let go so management could make room for AI. I received a message from one worker who wrote to say they were concerned for their job—and a follow-up note just weeks later to say that they’d lost it.

- 2025-05-22 : Klarna’s AI replaced 700 workers. It now wants some of them back to improve customer service

- 2025-05-14 : Klarna CEO says AI helped company shrink workforce by 40%

- 2025-04-28 : Duolingo will replace contract workers with AI

Abused to waste developer time

Criticism: LLMs used to waste developer time with fake security bounty bug reports:

- (from the maintainer of curl) 2024-01-02 The I in LLM stands for intelligence

Like for the email spammers, the cost of this ends up in the receiving end. The ease of use and wide access to powerful LLMs is just too tempting. I strongly suspect we will get more LLM generated rubbish in our Hackerone inboxes going forward.

Fails basic context dependence

Can fail basic context-dependence, like examples vs commands:

Scraping for training widely rejected

85% of Cloudflare's customers prefer to block GenAI training scrapers:

Allows the creation of abusive content for deliberate harm

- 2025-05-22 Someone released an AI model that makes deepfakes of me, without my consent by Jingna Zhang

Risk of misleading or hazardous output

- 2025-05-07 Machine-Generated Garbage Hall of Shame

Other Criticism

- 2025-10-22 : AI browsers are straight out of the enshittification playbook

- 2025-05-20 : ChatGPT and the proliferation of obsolete and broken solutions to problems we hadn’t had for over half a decade before its launch

- 2025-08-06 : None of this is real and it doesn’t matter (archived)

- 2025-05-22 : Anthropic’s new AI model turns to blackmail when engineers try to take it offline (archived)

- 2025: Jeremy Keith had to turn off the tags feature on Huffduffer due to AI scraping (more info)

- 2024-11-05 : Information literacy and chatbots as search (archived)

“Setting things up so that you get "the answer" to your question cuts off the user's ability to do the sense-making that is critical to information literacy. That sense-making includes refining the question, understanding how different sources speak to the question, and locating each source within the information landscape.”

“Let me get this straight,” John Mulaney said. “You’re hosting a ‘future of AI’ event in a city that has failed humanity so miserably?”

He added, “Can AI sit there in a fleece vest? Can AI not go to events and spend all day at a bar?”

- Don't write fan letters using GenAI, unlike what the Google Gemini ad is encouraging you to do:

- 2024-07-28 : Dear Google, who wants an AI-written fan letter? (archived)

See Also

- https://en.wikipedia.org/wiki/Large_language_model

- ai;dr

- https://github.com/ai-robots-txt/ai.robots.txt

- How to turn off training Grok on your tweets: uncheck this setting: https://twitter.com/settings/grok_settings

- autocorrect

- 2022-11-13 comic: By Bots, For Bots

- 2024-09-20 Tantek Çelik: Can we have CC-NT licenses for no-training (ML/LLM, GenAI in general), just like we have CC-NC for non-commercial?

- https://www.aitrainingstatement.org/

- "I’m intrigued and bored at the same time: I find it quickly becomes quite tedious. I have a sort of inner dissatisfaction when I play with it, a little like the feeling I get from eating a lot of confectionery when I’m hungry. I suspect this is because the joy of art isn’t only the pleasure of an end result but also the experience of going through the process of having made it." AI’s Walking Dog by Brian Eno

- ai.txt

- blog about GenAI / LLM related failures and/or organizations seemingly doing silly things with such technologies: https://pivot-to-ai.com/

- List of LLM bot user agents

- Criticism: visual design rut, popular LLM services seem to have converged on an indistinguishable commodity user interface (on the plus side, learning one means you learn how to use the others) https://www.flickr.com/gp/aaronpk/5C4ETw6zi6

- Criticism: Are AI Bots Knocking Cultural Heritage Offline?

- large language model traffic

- https://cartography-of-generative-ai.net

- Wikipedia:WikiProject AI Cleanup

- Criticism: slows down experts coding: 2025-10-22 How AI slows me down